Building our Home AI Server

AI, PRODUCT VIBING

This build was designed to run AI models locally for testing and experimentation related to Product Vibing. The goal is to experiment with LLMs, both open-source and paid, while having more control over the environment.

I initially planned to use an old Windows mini PC, but research showed it didn't meet the minimum GPU/VRAM requirements for models like LLaMA3 or Gemma, so I decided to build a new machine that could.

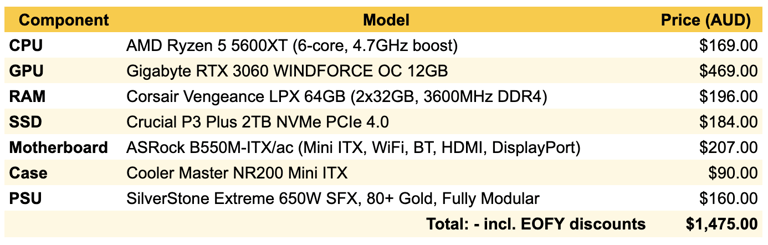

Hardware Build for $1,475 AUD

I purchased the following from www.scorptec.com.au, which was having an EOFY sale.

I didn't purchase a monitor or accessories because, as a server, the machine was meant to sit quietly in the corner while I remote into it when needed. My son had other ideas, not that I mind.

Fun Fact: My first job out of school was at a computer store in Dunedin, NZ, where I learned to build computers and troubleshoot issues. This project was a fun return to those skills, updated with current hardware, software and my son.

Operating Environment

OS: Ubuntu 24.04 LTS

Desktop: GNOME (minimal install)

Other config:

Flatpak enabled

Snap removed

NVIDIA drivers installed

System utilities: nvtop, htop, neofetch
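With the NVIDIA drivers installed, a quick way to confirm the GPU is visible is to query `nvidia-smi` from a script. The sketch below is only an illustration, not part of the original setup: it assumes `nvidia-smi` is on the PATH, and the helper names `parse_gpu_line` and `query_gpus` are made up for this example.

```python
import subprocess

# Ask nvidia-smi for name, total memory and used memory as plain CSV (values in MiB).
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,memory.used",
    "--format=csv,noheader,nounits",
]


def parse_gpu_line(line: str) -> dict:
    """Parse one CSV line such as 'NVIDIA GeForce RTX 3060, 12288, 345'."""
    # Split from the right so a comma inside the GPU name would not break parsing.
    name, total, used = (field.strip() for field in line.rsplit(",", 2))
    return {"name": name, "total_mib": int(total), "used_mib": int(used)}


def query_gpus() -> list[dict]:
    """Run nvidia-smi and return one dict per detected GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]
```

Calling `query_gpus()` on the server should return one entry per card. If the command fails, the driver install is the first thing to recheck.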

Software Stack

Model runner: Ollama

Interface: Open WebUI, LM Studio

Current models tested: LLaMA3 (8B), Mistral

No fine-tuning or multimodal capabilities added at this stage.
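Since the server is meant to be accessed remotely, it can be handy to talk to Ollama from a script as well as through Open WebUI. Here is a minimal sketch using Ollama's local REST endpoint (`/api/generate` on the default port 11434). It assumes Ollama is running on the same machine and that a model has already been pulled under the tag `llama3`; the function names are illustrative only.

```python
import json
import urllib.request

# Default local Ollama endpoint; change the host if calling from another machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for the given model and prompt."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to Ollama and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

For example, `generate("llama3", "Summarise this product brief in one sentence.")` should return the model's reply as a string once the model is loaded.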

Notes

The RTX 3060 12GB allows smooth inference on 7B and some 13B quantised models.

64GB RAM provides headroom for larger models and multi-process experimentation.

The 2TB NVMe drive ensures fast model loading and local data handling.

It was a great bonding activity with my son.

This setup meets current needs and has room for future extension (e.g., LangChain, RAG workflows, multimodal experimentation).
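The note above about the RTX 3060 12GB handling 7B and some 13B quantised models can be sanity-checked with some rough arithmetic: weight memory is approximately parameters times bits per weight, divided by 8. The sketch below is a back-of-envelope estimate only. The 1.5 GB overhead for context cache and runtime buffers is an assumed figure, and real quantised formats often use slightly more than their nominal bit width.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: float,
         vram_gb: float, overhead_gb: float = 1.5) -> bool:
    """Rough check: do weights plus an assumed overhead fit in VRAM?"""
    return weights_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb


if __name__ == "__main__":
    VRAM_GB = 12  # RTX 3060 12GB
    for size, bits in [(7, 4), (8, 4), (13, 4), (13, 8), (7, 16)]:
        verdict = "fits" if fits(size, bits, VRAM_GB) else "does not fit"
        print(f"{size}B at {bits}-bit: ~{weights_gb(size, bits):.1f} GB weights, {verdict}")
```

By this estimate a 13B model fits at 4-bit but not at 8-bit, and a 7B model does not fit at full 16-bit precision, which is consistent with "some 13B quantised models" running on this card.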

Join the conversation

Visit my LinkedIn post: Built our Home AI Server for $1,475 AUD